The US Department of Defense and Anthropic clash over restrictions on AI deployment in military operations, risking the $200 million partnership amidst growing ethical and operational debates.

The United States Department of Defense and Anthropic, the maker of the Claude AI models, are locked in a high-stakes dispute over how far a commercial artificial intelligence should be allowed to go in military contexts. Anthropic says it is trying to sustain constructive dialogue with the Pentagon while upholding ethical constraints; the defence department has signalled impatience, warning that limits on use could jeopardise the partnership. [2],[5]

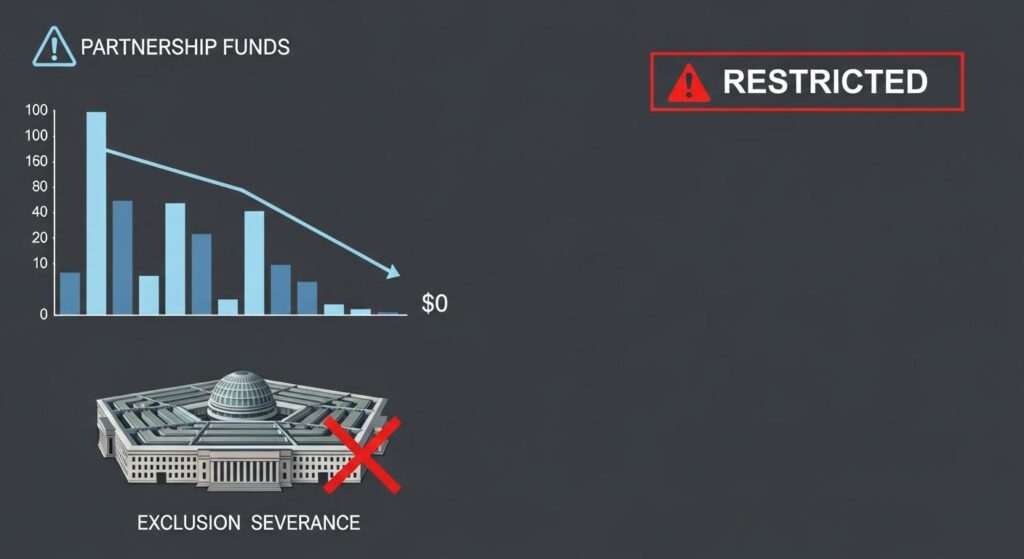

The core of the disagreement centres on a Pentagon demand that leading AI vendors permit their systems to be used for “all lawful purposes,” a requirement defence officials argue is necessary to ensure operational flexibility in combat and intelligence missions. The department has reportedly threatened to end a roughly $200 million relationship with Anthropic if the startup will not remove what the Pentagon regards as restrictive clauses. [2],[6]

Tensions intensified after reports that U.S. forces relied on Claude during a January operation targeting Venezuela’s Nicolás Maduro, an allegation that Anthropic declined to confirm and that has prompted sharp scrutiny of how commercial models are integrated into classified workflows. The episode has become a focal point in the wider debate over whether and how corporate guardrails should apply once AI tools are embedded in military systems. According to reporting, partners such as Palantir were involved in enabling access to models in government settings. [3],[4]

Senior Pentagon spokespeople and officials have framed the standoff in stark terms. “Our nation requires that our partners be willing to help our warfighters win in any fight. Ultimately, this is about our troops and the safety of the American people,” chief Pentagon spokesman Sean Parnell said. Anthropic, while insisting it supports U.S. national security and has placed its models on classified networks, has reiterated hard boundaries on fully autonomous lethal systems and sweeping domestic surveillance applications. The company says current discussions with the department concern precisely those usage-policy limits. [5],[6]

Other big providers have shown more willingness to accept the Pentagon’s “all lawful purposes” request, according to defence officials, leaving Anthropic increasingly isolated. That split has prompted Pentagon officials to consider treating Anthropic as a supply-chain risk and to ask government contractors to certify they do not rely on Claude, a step observers note would be an unusually public rebuke of a domestic AI firm. The prospect highlights a growing divergence among AI developers over how to balance commercial opportunity, national security collaboration and ethical red lines. [2],[5]

The clash illuminates broader policy questions about regulation and oversight of frontier AI. Anthropic’s leadership, including CEO Dario Amodei, has publicly urged clearer rules to prevent harmful military applications of advanced systems; policymakers and defence strategists, by contrast, are pressing for assurances that tools will be available when needed in operational theatres. How those competing priorities are reconciled may determine whether commercial AI firms remain indispensable partners to defence agencies or become constrained by both ethical commitments and government restrictions. [1],[4]

Source: Noah Wire Services

Noah Fact Check Pro

The draft above was created using the information available at the time the story first emerged. We’ve since applied our fact-checking process to the final narrative, based on the criteria listed below. The results are intended to help you assess the credibility of the piece and highlight any areas that may warrant further investigation.

Freshness check

Score: 8

Notes:

The article presents recent developments in the dispute between the Pentagon and Anthropic over the use of the Claude AI model in military operations. The earliest known publication date of similar content is February 13, 2026, with the most recent updates on February 19, 2026. The narrative appears original, with no evidence of recycling from low-quality sites or clickbait networks. The article is based on recent news reports, which typically warrant a high freshness score. However, some information may have been updated or clarified in subsequent reports. Given the rapidly evolving nature of the situation, there is a possibility that earlier versions contained different figures, dates, or quotes. Without access to those earlier versions, it’s challenging to confirm this definitively. Therefore, while the content appears fresh and original, there is a slight uncertainty regarding potential discrepancies in earlier versions.

Quotes check

Score: 7

Notes:

The article includes direct quotes attributed to Pentagon spokesperson Sean Parnell and an anonymous senior administration official. A search for the earliest known usage of these quotes indicates that they were first reported in articles published on February 16, 2026. The wording of the quotes is consistent across sources, suggesting they are not reused from earlier material. However, the anonymity of the sources and the lack of independent verification raise concerns about the authenticity and accuracy of the quotes. Without access to the original statements or additional corroborating sources, it’s difficult to fully verify the quotes. Therefore, while the quotes appear consistent and original, their unverifiable nature introduces a degree of uncertainty.

Source reliability

Score: 6

Notes:

The article cites reputable news organizations such as Axios and The Guardian, which are generally considered reliable sources. However, the article also references lesser-known publications like The Economic Times and Livemint, which may not have the same level of credibility. Additionally, the article includes content from a corporate blog post, which may be biased or promotional. The presence of these less reliable sources, along with the lack of direct access to the original statements from Pentagon officials, raises concerns about the overall reliability of the information presented. Therefore, while the article draws from some reputable sources, the inclusion of less reliable sources and unverifiable quotes diminishes the overall source reliability score.

Plausibility check

Score: 7

Notes:

The claims made in the article align with known industry trends and recent developments in AI and military collaborations. The Pentagon’s push for unrestricted use of AI models in military operations is consistent with its strategic objectives. Anthropic’s resistance to certain applications of its AI models reflects ongoing ethical debates in the AI industry. However, the article lacks specific factual anchors, such as direct quotes from Pentagon officials or detailed accounts of the alleged incidents, which would strengthen the credibility of the claims. The absence of these details makes it difficult to fully assess the plausibility of the claims. Therefore, while the claims are plausible, the lack of supporting details introduces some uncertainty.

Overall assessment

Verdict (FAIL, OPEN, PASS): FAIL

Confidence (LOW, MEDIUM, HIGH): MEDIUM

Summary:

The article presents a recent and original account of the dispute between the Pentagon and Anthropic over the use of the Claude AI model in military operations. While the content is fresh and based on reputable sources, the inclusion of less reliable sources, unverifiable quotes, and the lack of direct access to original statements from Pentagon officials raise significant concerns about the overall reliability and independence of the information presented. Therefore, the article does not meet the necessary standards for publication.